What's the Future of IDEs?

November 2021

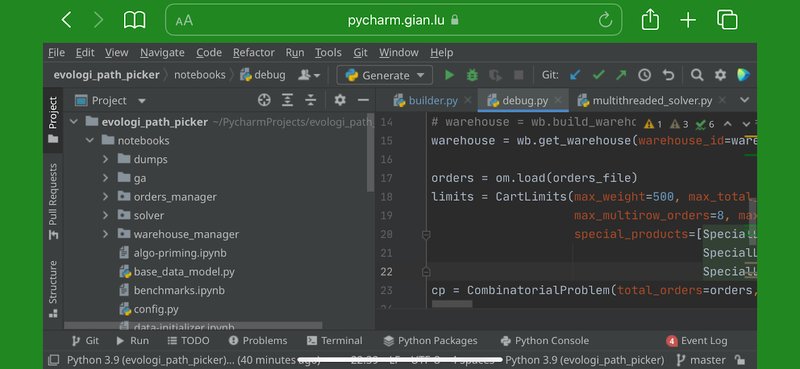

During an idle Sunday evening, a few weeks ago, I played with JetBrains’ Projector: it’s IntelliJ — one of the most powerful IDEs around — installed on a remote server and accessible through the browser.

I wanted to see how I might move some heavy computing tasks, like training and evaluating large machine learning models, off my local computer without compromising my coding experience.

It’s a surprisingly easy setup to build. It took me less than an hour, from hitting “Create instance” on AWS to having a fully-fledged AI project running on my iPad Pro.

It felt like magic.

==PyCharm running on my iPhone==

The concept is bold and compelling: moving the development environment to a remote server, instead of keeping it on a local machine. Effectively, a centralized form of workflow: a mainframe. What is localhost, at that point?

Of course, the idea is not unique to JetBrains. Microsoft is going in the same direction with Visual Studio Code, now available online — in particular on any GitHub repository. Same goes for smaller companies, like Replit, trying to turn IDEs into a multiplayer experience.

Coding through your own server no longer binds you to a local device, as it conceptually separates the machine you physically interact with from the machine running the actual code.

This opens several new doors and opportunities.

A matter of software and hardware independence

Software-wise, a fully remote environment helps avoid dual-boot madness and fights with incompatible drivers: my dev environment sits on an Ubuntu server, which makes tooling dramatically easier. Meanwhile, I can keep Lightroom, Cinema 4D, and Photoshop on my local macOS machine. Technically speaking, you might attempt to dockerize parts of the Adobe Creative Cloud, but it’s legally murky and worryingly hackish (and it doesn’t sound right anyway).

Even more interesting is the hardware independence. By delegating the computing power to specialized providers it’s possible to unlock a tremendous amount of flexibility, all the while not having to fight against typical trade-offs like CPU-vs-battery, HDD-vs-price, battery-vs-weight.

I can code on my razor-thin iPad, with an XDR display, long battery life, and a relatively small internal drive, while working in a Linux environment with CUDA drivers and access to Nvidia GPUs or Google TPUs. Should I temporarily need more RAM to train a large model, I can expand (and then contract) my resources the moment I need to, without touching my rig.

This makes the setup economically efficient.

If I were to keep my remote instance running 24/7, I would pay roughly $35/month. However, I can have it switched off during, say, weekends (or even nights). That would save between 35% and 50% of the cost. On the other hand, a fully-fledged MacBook Pro depreciates at a rate of $50/month, making it 40% to 50% more expensive. With such a margin, it’s possible to invest in a local machine better suited to act as a coding client: great screen, lightweight, good battery life.
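To make the arithmetic above easier to check, here is a back-of-the-envelope sketch in Python. It only uses the figures quoted in this post ($35/month always-on, $50/month of laptop depreciation, 35% to 50% savings from switching the instance off); the function and constant names are made up for illustration, and real cloud bills will include things this ignores, such as storage that keeps being charged while the instance is stopped.

```python
# Back-of-the-envelope check of the figures quoted in the post.
# All names here are hypothetical; the numbers are the post's own estimates.
ALWAYS_ON_MONTHLY = 35.0  # remote instance running 24/7, in $/month
LAPTOP_MONTHLY = 50.0     # MacBook Pro depreciation, in $/month


def remote_monthly_cost(savings_fraction: float) -> float:
    """Monthly instance cost after switching it off part of the time."""
    return ALWAYS_ON_MONTHLY * (1.0 - savings_fraction)


def premium_over(remote_cost: float) -> float:
    """How much more the laptop costs than the remote setup, as a fraction."""
    return (LAPTOP_MONTHLY - remote_cost) / remote_cost


if __name__ == "__main__":
    # 0% = always on; 35% and 50% are the savings range quoted in the post.
    for savings in (0.0, 0.35, 0.50):
        cost = remote_monthly_cost(savings)
        print(
            f"savings {savings:.0%}: ${cost:.2f}/month, "
            f"laptop premium {premium_over(cost):.0%}"
        )
```

Under these assumptions, the laptop comes out roughly 43% more expensive than the always-on instance, which is where the 40% to 50% range lands. Once the instance is switched off on nights or weekends, the gap gets wider still.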

It scales well too: the larger the team, the more efficient a remote development server becomes. This is already happening in higher-volume scenarios, like in data science teams: it’s fairly typical to have a Jupyter notebook running on a remote instance, with the team iterating on AI models while remotely connected to it. With a fully-fledged cloud IDE supporting multiplayer collaboration (such as JetBrains’ Code With Me, or Visual Studio’s Live Share), this concept can theoretically be applied across different development teams, significantly compressing costs.

As with every good idea, I’m hardly the first to have thought about this.

MightyApp, for instance, virtualizes Chrome: they want you to stop paying for expensive hardware and outsource the computing power needed to run modern web apps to them — accessing economies of scale on both performance and maintenance costs. It does make sense.

However, privacy implications can be profound. That’s one of the reasons why I don’t think that proprietary cloud IDEs will be the future of modern development, as Replit and Microsoft would like us to think. Developers might be less likely to take risks, or optimize their working schedule however they see fit, if every keystroke is being tracked and aggregated. Learning and working speed would be hampered, and developers’ performance would likely regress to the mean of their team. Nobody wants that.

But privacy is only part of the story. Most (all?) of the benefits of remote development servers reside in controlling the full stack, especially the server itself.

Prevent tinkering with the low-level machine, and you end up with no more hacking and many more opportunities for micromanagement. What compels me the most about cloud IDEs is outsourcing raw computing power while keeping control over the underlying hardware.

Is this the future of coding?

I’m not sure.

Despite my childlike excitement at running TensorFlow on an iPad, at least two elements are cooling off my enthusiasm.

First, the cost-efficiency of the new M1 chips is a game-changer. Some people reported order-of-magnitude improvements in their development speed. Why run your own development server when you have a local machine this powerful and this cheap? PC performance is keeping pace with the growing need for computational power. This is a trend moving in the exact opposite direction of needing to run an IDE in the browser, and one shouldn’t lightly discard trends.

Second, cross-platform mobile development has turned out to be a broken promise (with Apple and Google putting quite some effort into making sure of that). Native clients just work better. They’re snappier, have lower latency, and interact better with the local machine (think of shortcuts, for instance). It’s never been just a matter of computing power: modern phone chips fear nothing, and yet React Native apps just suck when compared to their native counterparts. Will a browser-mediated IDE ever deliver the same UX as a native local instance?

I’m torn.

I want to believe that we can (and should) delocalize development to remote servers, while investing R&D into making our local machines better clients to interact with instead of faster machines to run code on.

But we’re not there yet.

Chromebooks are going in the right direction, and not by chance. They’re an attempt to fulfill Google’s vision of “everything in the cloud”. I don’t think, though, that they could ever be a great machine to code on: terrible keyboard, terrible screen, terrible shell experience.

We need to take that concept to the next level: have a remote server do all the heavy lifting, while investing in both hardware and software tools that allow coders to operate such a server through an exceptional developer experience.

I’m curious to see whether someone will ever pick up that trail and change — once and for all — the way we interact with our machines.

• • •