The Dawn of a New Startup Era

September 2024

I’ve been thinking a lot, recently, about what the next wave of generational companies will look like. What’s the shape of fund returners going to be, in the next decade? How will they affect startup formation and VC performance?

For the people involved in their creation, generational companies yield an economic outcome many orders of magnitude larger than the rest of the market. This requires making a highly concentrated investment in a belief when only a few others think it’s a good idea.

By definition, if everybody agrees that something is worth investing in, the return on that investment will correspond to the market average. Formally, “market” is simply “consensus on correct pricing”. It’s the average, the norm, the wisdom of the crowd. Generational companies are outliers, happening several deviations away from that norm. If we accept this definition to be true, it must follow that consensus and successful startups are antithetical. The more obviously good an idea is, the more competition there will be, the more an entrepreneur's likelihood of succeeding will eventually regress to the mean — in this case, average market returns.

Successful founders defy consensus and are willing to bet asymmetrically big on their defiance with their money and time. Markets are informationally efficient, but seldom allocationally efficient1: when founders are successful, they don't necessarily have an information advantage, knowing something secret to everyone else, but they are eager to act on their conviction when few others are. They’re willing to make a concentrated bet when the rest of the market is averaging and diversifying.

It feels crazy through today’s eyes, but 20 years ago few were open to betting that the Internet would’ve become so big so quickly. The pioneers of the dot-com years showed that economic activity over the wire was possible, proving tech-market fit, but it wasn’t obvious how deep the Internet commercialization could’ve become. Timing and future size was still a question mark. A lot of folks thought Spotify couldn’t survive due to its novel business model.2 Many investors thought that Airbnb was a stupid idea because people would get murdered when renting strangers’ rooms.3 Most knew that collecting money online was a hard problem, but Patrick and John were the only ones willing to bet their lives to solve it with Stripe, as they thought it was worth it. All these investments were objectively crazy at the time and required a unique and somewhat contrarian view of what the future could look like.4

Fundamentally, the startup era of the last couple of decades has been about betting that the virtual entirety of consumer spending would move from the physical to the digital world, a bet on the speed of change of consumer behavior. As such, and despite being labeled as “tech companies”, the last generation of startups faced a growth challenge more than an engineering one: the largest alpha was in solving distribution and monetization more than raw technology. Although Facebook (the true precursor of this generation), Airbnb, Uber, DoorDash, Ramp, Rippling, Stripe, Flexport, and Slack have all overcome gigantic engineering challenges while scaling their products, none of them have been primarily about technical invention. Fundamentally they’ve all been about betting on the depth of market penetration of an existing (albeit new) technology.

Internet-market fit

It was not a sure bet – if anything, the contrary – which meant that incumbents took a very long time to start seeing the opportunity (and threat). Education institutions lacked fundamental material to build businesses on the Internet; when I went to college, in the early 2010s, there was still no engineering course available on mobile development, nor on reactive frontend development. And I’m old enough to remember that being a nerd meant being bullied: culture too was lagging behind, which in turn reflected in a low attendance to all-male computer classes in college. Dropping out of college to found or join a startup was radical, cringe, weird, contrarian, socially awkward. All this meant that there was relatively low competition from both peers and incumbents, compared to the magnitude of the actual economic opportunity.

Since the challenge was a growth one, founders could keep tech risk low, while focusing on adoption risk and iterating their product to get to product-market fit. As such, startups trying to get persistent differential returns focused primarily on network effects more than anything else, and they had enough time to conquer a defensible position in a rich niche before incumbents could take notice. Network effects became a key tenet of the playbook of the last startup era. The competition that mattered was from peer startups, and that meant that speed-to-market was the most important edge. “Move fast, break things” comes from this premise: in a winner-take-all market with no technical moat, being slow means being dead.

We now know that the bet was indeed the right one. The expected value of building “Internet apps” was large, and positive: the payoff of building with the cloud stack of web + mobile, weighted by the relative likelihood of success, was enough to offset the relatively low costs. Whenever an expected value is larger than 0, it’s time to dump money and scale. That’s how we got the startup factory model and monstrously large VC funds. Accelerators, incubators, pre-seed, seed, series A, B, C, D, elevator pitches, pitch decks, well-defined milestones, the industry going up to the right. Everybody who was in the game in the 2010s will remember the feeling of startups being churned out at an increased pace, while creativity was slowing down, and salaries were growing due to talent supply bottlenecks.

During these years we had a series of “golden ages”, from consumer Internet, to the sharing economy, to SaaS, to mobile apps. Overall, they all aimed at deepening the product-market fit of the Internet.

Could it last?

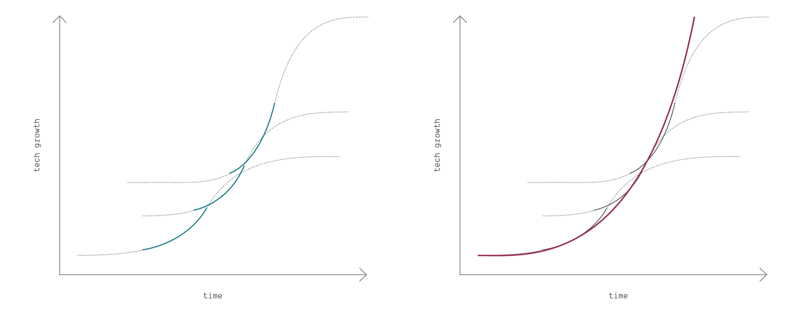

One of the most important lessons I was ever taught by one of my mentors is that true exponentials don’t exist. Look hard enough, and they’re always sigmoid curves. The way you have sustained exponentials over time is by concatenating these S-shaped curves in such a way that you end up with constant acceleration.

Persistent exponentials don’t exist because opportunities saturate. It’s just nature. As entropy will only increase, consensus on successful ideas will only go up, and with that, a regression of capital returns to the mean.

As the successful bet on the depth of the Internet began to fully pan out, and we had the triumph of its commercialization, consensus spread. And with that, the likelihood of both systematically and massively beating the market with Internet apps went down.

The mechanics are straightforward. With so much money being made, more and more people wanted to learn to code, which led to a huge increase in courses and education material (remember the bootcamps heyday?). Tech tooling to build software got more approachable and user-friendly. All this unlocked talent. An unprecedented inflow of VC money almost nullified the financial burden of starting a business. And, of course, AI has now enabled anyone to build a full production-ready web app in minutes only by talking in plain natural language. All this relaxed the supply constraints of cloud-based technology, producing an inherently deflationary pressure.

A reinforcing dynamic happened on the demand side as well. The incumbents that startups go up against today have either been startups themselves in the past (think of Facebook, or Uber), or have correctly identified that betting on the Internet is just a good idea (think of Chase Bank shipping a good mobile app, or Goldman Sachs with an internal team fully devoted to embedding LLMs in their business5). Contrary to before, now incumbents do manage to build great digital products, sometimes moving even faster than startups.6

This means that competition on traditional Internet-focused surfaces is now squared, as it includes both peers and incumbents, contrary to what used to be years ago. Existing businesses have begun being comfortable building rather than buying. Tech began to feel extractive, rather than additive: more and more people are in tech to make money, to climb the corporate ladder, to extract value, rather than adding it and growing the pie out of sheer curiosity of genuine love for the craft. Tech is attracting a different type of people than before, and everyone who's been in this industry long enough knows it and feels it. When considering “tech” to be “web and mobile apps”, most large companies are “tech” companies today. And stocks with tech being their core business model currently account for almost half of the S&P 500, up from less than ¼ only 5 years ago.7 This all produces lower demand, and having lower demand is also deflationary.

This pressure on margins pushed down the likelihood of delivering outsized returns. Producing persistently large differential returns at a factory scale will necessarily be harder when consensus is clear to everyone.

All this is a well-understood dynamic. That's why many VCs invested so heavily (and sometimes blindly) in AI: they see the expected value of the cloud-based asset class contracting, and they hope AI can be the next sigmoid curve in the general growth of venture-backed companies, after broadband, cloud, and mobile.

AI is a monumental, historical shift, but it’s much more similar to the wild 90s tech era than the startup-factory one, where tech-market fit hasn’t fully played out yet, the fog of war is thick, and bodies are beginning to pile up.

At a 30,000-feet, bird-eye view, I see three broad categories of software startups being founded today: AI frontends, AI infrastructure, and AI full-stack products. Frontends are great for founders, infra is great for investors, and full-stack is the happy marriage between the two.

AI frontends: great for founders, not so much for investors

AI frontends are products that embed a third-party LLM, and whose added value lies in the distribution to the end consumer. Some would disparagingly call them “wrappers”. The nature of their business is such that they’re still working with Internet-based technologies, still tackling an Internet growth challenge, and as such, are operating in an environment of high consensus. Which means that their expected value is relatively low.

With the latest coding agents, even people who can’t code can build and sell an AI app. Monetization and distribution techniques on the Internet are mostly figured out, there are few “growth hacks” 8 likely to yield an unfair advantage over the competition for long enough to get to deep network effects. That opportunity has been saturated during the previous startup era.

Startups need to have a clear path towards establishing a defensible position over the market9, and I don’t think that network effects are any longer a viable solution, likely to yield positive results: speed-to-market is too high and the technological barrier to entry is too low. The only scenario where these startups can build huge companies is by relying on a different kind of market defense, eg. by building AI-wrapping web app products for large markets with a high sales barrier to entry (like the military).

The general push-back to this argument is that 10 years ago we would never have said that web apps are “SQL wrappers”, or that mobile apps are “iPhone wrappers”. The idea being: any new technology is just a wrapper on previous technologies.

I disagree, and the reason is that API-mediated AI is not a new technology. It's just CRUD. There’s a qualitative difference between SQL 10 years ago and a REST endpoint today, which cannot be ignored. The stack “web app + Stripe + PLG/SLG playbook” is a well-known mix with very high consensus, both between startups and within incumbents. The barrier to entry is vastly lower today, and the supply of cloud-based products is significantly larger than it was 10 years ago.

This doesn’t mean that GPT-wrappers won’t be moderately successful. On the contrary, I believe that we're now entering a phase in startup history when building a $1m ARR business with a 5 people team is going to be not just possible, but easy. An environment of low defensibility and high consensus produces a fragmentation of entrepreneurship, as opposed to winner-take-all outcomes, which can be either amazing (for lifestyle founders) or bad news (for big funds).

Serving small market niches will be possible, and now economically profitable because of the reduced financial burden of building software. Product-market fit will be insanely high, because of the high level of focus, and competition will be manageable, given the small size of the opportunity.

However, getting out of those niches – the next sigmoid curve in the startup growth journey10, a critical moment that any successful business at some point has to go through – is going to be vastly harder than previous generations of startups. Web companies are no longer able to reach escape velocity and defend themselves outside of their niches through network effects, because of too much cross-competition.

Some data supports this view. Graduation rates are slowing down: the number of companies that raised a seed round and made it to Series A within two years halved between 2018 and 2022, going from 30% to 15%. Fewer funds in the most recent vintages have DPI, the median TVPI since 2021 is still below 1x, and the median IRR since 2021 still trails earlier vintages.11

Some may argue that it’s because of general market dynamics driven by the macro climate (unreasonably high valuation in 2021, followed by an unreasonably bearish market in 2022). There’s data to back this counterpoint too: the correlation between 5-year DPI and current IRR for 2005-2008 vintages is moderate at 0.4512, so these numbers going down may not be a good predictor of the final performances of the VC market today.

Nonetheless, my belief is these trends shouldn't be totally discounted. There’s some structural adjustment happening beyond the contingent effects of boom-burst micro cycles. Fund returners are necessarily harder to come by for an Internet-centric investment thesis. Their expected value has decreased, which may not be too bad for a boutique seed fund — there’s still enough room to return them — but it’s definitely less than ideal for larger funds, and thus for founders raising series A+ rounds.

If I can summarize the core of my argument in a nutshell, all I’m saying is that there’s no more alpha in knowing React anymore. At least, not enough to build true fund returners. It makes a great lifestyle business in case of a distribution advantage, or in small markets with specific idiosyncrasies, but I don't expect it to yield a 100x market return on a series A. This doesn’t imply that AI wrappers won’t beat the market under any circumstances, but it means that it’s not the scenario with the highest likelihood of happening. The expected value is packed around the market average, probably slightly skewed to the right due to a larger risk-taking appetite of small companies, but not dramatically larger. If I had to bet, I'd settle on an average +20% return on capital. Very good odds for individual entrepreneurs, but not great if you're in the VC line of work.

So where is the alpha today? Where's the 100x return on capital more likely to be?

That brings me to the two other types of companies I’m seeing being founded: AI infra, and full-stack AI.

AI infrastructure: high variance, positive mean

Many of the biggest winners of the dot-com era are companies that made the Internet possible — from accessing it (Netscape) to being able to safely pay on it (PayPal) to navigating through it (Google). In my mind, these are infrastructural companies, that were possible because at the time we were still trying to figure out tech-market fit. These companies had a fundamental thesis on what things could (should) look like years down the road and built the tools to make them possible. It was an amazing leap of faith.

We’re in a similar stage today with AI. It’s very hard to know for sure what kind of products we’ll see becoming possible in a few years, and thus, what infrastructure we’ll need. Will it be vector databases, like Chroma and Pinecone, because In Context Learning will be the dominant way to use LLMs? Or is it going to be more post-training fine-tuning, enabling companies like Modal to thrive? Not all will be binary choices, but many will. If most of the code will eventually be written by agents, with no human intervention, the right bet is infrastructure and data collection to improve the underlying model. But if code will stay hybrid and humans in the loop will keep having a strong role in writing it, it sounds smarter to just focus on a powerful editor while assuming the rest of the infra to stay a commodity.

AI infrastructure, in my mind, is a very broad category that goes beyond just “tools for LLMs to use”. For instance, I’ve heard from a few founders the argument “Education will be impacted by AI: we’re building tools for what the post-AI Education world will look like, so we can overcome the structural problems of this market”. That too is AI infrastructure in my mind — specifically, infra for _education _AI.

The alpha for these companies is not necessarily the technology. They can very well be just React web apps all built by low-tech founders with a keen product sense, capable of imagining a different world. The low consensus stems from having a strong opinion on what the post-AI world will look like, and being willing to bet on it. Being willing to make a large concentrated bet on a pure prediction with little to no information advantage will necessarily yield an outsized return… if the bet is right.

Although I see the expected value of these companies to be positive, my sense is that its variance will be high compared to the last startup era. Their outcome will be larger, but the mortality rate will also be larger — exactly like during the dot-com bubble.

I expect some heat for this, but here’s my hot take: I don’t think a founder should pick this category if they can avoid it. It feels like gambling, playing loose before the flop hoping in a good hand. Nobody knows what the market needs even just a couple of years down the line, and being willing to bet an entire company on something you can’t do anything about feels unnecessarily risky. Founders have little power over the final outcome: they could be very right, but could be very wrong, and there’s little about either within their control. In 2023 many thought that GPT-4 performance was going to be a persistent technical advantage, and many labs were founded off of that premise: the actually useful large models will be an oligopoly. But now we have at least a dozen GPT-4-level models, some of them even open source; as it turns out, that moat was not that deep. Many infra companies were built on the premise that context must be carefully managed with embeddings, but we’re now beginning to see multi-million context-length models with reasonable inference prices, rendering them useless. Success will boil down to the specific shape of the market demand in the future. It’s a very peculiar category because the market is already forming (“AI”), so it’s not entirely a green field where you can be a master of your fate, but the actual idiosyncratic implementations are still undefined, increasing the actual demand risk.

Since the expected value is positive, however, for funds it’s a different story. I fully expect VCs betting on this category to be very successful, because of the asymmetry of risk between founders and investors: the latter can afford to take multiple uncorrelated bets. Spray-and-pray will work, but picking the winner in this category will be much harder than in previous eras.

Going full-stack

The companies I’m most fond of are those willing to go full-stack, controlling the whole data feedback loop and taking more tech risk with it. It feels like the ideal case, both for founders as well as for investors.

It’s obvious to everyone that the defining technical trend of the next decades is going to be scalable intelligence. I also believe that we’re approaching the limit of what’s possible to develop given freely scrapable Internet information, and that beyond this point, we need more niche data to push forward the frontier of AI models. We’re data-capped.

There are two main philosophies on how to find more (defensible) training data, and both imply being willing to assume more technical risk.

The first is to collect that data with products, via users’ behavior. Being data-capped creates an opportunity for those startups that can build a product capable of collecting training sets that no one else can access, and that can use that data to develop proprietary AI models.

That’s why I’ve been significantly more bullish on Anthropic than OpenAI: the former is much more disciplined in its product strategy. That’s also the reason why Midjourney is so far ahead of any other competitor, despite fierce competition: their Discord community guaranteed them unrivaled post-training fine-tuning13, which ensured a data flywheel via preference optimization.

Smaller companies can still compete by focusing on narrower use cases. The data that they need to collect needs to either unlock a fundamentally new capability that is not otherwise available with general models, or to make something already possible but not economically feasible orders of magnitude faster and cheaper. It’s a strict set of constraints. Most products are just fine exclusively using large proprietary models already, making it hard to build a defensible moat by just improving that baseline. It’s a tight path, but it’s possible, especially in post-train.14

These startups will need to make AI a fundamental part of their core competencies — otherwise, how would you use the data that creates the moat? But also, they’ll likely need to hire specialized talent with vertical domain knowledge too, otherwise it’s tricky to fully leverage the advantage. Right now there’s an overflow of codegen tools because code vertical knowledge is abundant among AI practitioners (it’s all very meta). It will start to be interesting when we see data flywheels in other verticals too — assuming they exist and they don’t simply belong to the previous category, where foundational models are already good enough.

The resources devoted to research on how to best use custom models in their product will be significantly larger than a traditional startup. These companies will take on more tech risk, on top of the usual market adoption risk. The “research” function will be more pronounced. They’ll all have some kind of internal lab driving the long-term defensible strategy.

There is a second philosophy to address the data cap: users are not needed anymore. All the data we need to build AGI is already available within the output distribution of existing LLMs, and it’s more a matter of having a feedback loop that gets you there, free from users altogether. I know at least one big lab that is pursuing this theory, but it’s specifically focused on coding. The reason why coding lends itself well to this path is that it’s fairly easy to independently test whether the output works or not without the need for human feedback (in the form of users).

Although there might not be an explicit data flywheel in this product-free model, there’s still the idea of making a big technical bet — be it in synthetic data generation, pre-train long context windows, leveraging new architectures, or new training processes. All imply lots of time and lots of money to see the bet through, which gives these companies a long first-mover advantage to kick off other forms of network effects before the competition arrives.

This is the most extreme technical version of “I know something technical that you don’t” for software startups.

But it’s not the deepest you can get in the technical risk scale.

A special case: hardest difficulty, highest payoff

I expect the true market crushers of the next two decades to be companies that are willing to venture into doing something that few would bet their professional lives on: applying the data flywheel to the world of atoms. That can range from defense tech, to biotech, from space tech, to robotics, from consumer hardware, to anything in between.

While moving away from a pure software play still has the lowest level of consensus in the startup ecosystem, which still implies a relatively low level of competition, it also gives access to the least accessible data on the market today to develop powerful deep models (not necessarily language ones). Some things happening in the physical world are simply not part of Internet-scrapable data. This creates the opportunity to build very defensible businesses, with all the time needed to dig a moat before competitors begin to catch up — which cannot be said for their pure software equivalent. And some might argue we can’t escape atoms if we want to build AGI.15

There are not many success cases I can mention to support my argument: these companies are being built right now. It will take many years to see them flourish and thrive. I don’t think it’s a coincidence, for instance, that some pure software companies like Midjourney seem to be getting ready to make a consumer hardware play.16 Conceptually, the archetypical company that I think will win the biggest will look like Waymo. Hardware-first, ultra-high capex, more than a decade of R&D and deep technical work before seeing the light at the end of the tunnel, but an incredibly defensible position that will be very hard to attack. And the moat is not simply technical. It’s a data advantage too, as they are not a car company, they’re an AI company that is constantly harvesting data and improving their models. Physical companies applying AI to their verticals will be a marvel to witness, and at the risk of being proven completely wrong, I don’t think they run the risk of being disrupted in a hypothetical post-AGI world.

The funding dynamic of these “extreme-technical-bets” will be different, though.

Their capital intensity is nothing like software, it’s a qualitatively different game, and that makes fundraising different too. Waymo was incubated inside Google. OpenAI, the last successful speculative technical bet (some would say also a hardware one!, on GPUs), couldn’t happen without Microsoft. Varda Space happened because of the direct will of Founders Fund. Tesla and SpaceX wouldn't have happened without a hundreds-of-millions-dollars concentrated bet made by one guy. Some founders decide to be acquired early by an industrial partner to continue their journey (the story of Cruise). Another way is to partner with the government to subsidize their extreme technical risk (like some defense tech companies, or to a certain extent Tesla too). But the bottom line is that funding doesn’t resemble anything like the factory model we’ve been used to so far.

So… where are we going?

It’s clear to me, and to pretty much everyone at this point, that purely Internet-based startups are approaching a saturation point. Their peculiarities (proven tech-market fit, a growth challenge to figure out, large portions of the existing markets to grab and consolidate) led to specific playbooks that have worked well so far. I believe that being willing to challenge those playbooks will bring the largest market returns, and will eventually bring us to a different kind of equilibrium.

As we’re converging to a new balance, new breeds of startups are supplanting the currently dominant species, and are all focusing on a bet that currently has low consensus: being willing to take on a lot more tech risk. The new startup era we’re walking towards will be heavier on technology than the one we’re leaving behind. We’re going to see longer incubation periods, and likely higher capital intensity to fund ultra-specialized research that won’t be easily commoditized just yet. Investors will necessarily need to be comfortable with tech slides more than market ones, and to do more scientific due diligence than growth one.

Directionally, the bulk of returns will move from the Andrew Chen-types17, to the Delian Asparouhov and Nat Friedman18 ones: investors comfortable with reading preprint papers and making bets on immature technologies, rather than on growth loops and retention cohorts of pure Internet products.

More opportunities are opening up, but they probably won’t be easy to seize for most people. Success – more than ever – is going to be unevenly distributed.

We’re witnessing the dawn of a new startup era.

• • •